When Not to Use AI: Deterministic Workflows in Production Systems

AI is everywhere. Too everywhere. From answering "hi" to deciding how your production platform runs sanity checks, we've quietly crossed a line. Not because AI can't do these things, but because it shouldn't do all of them.

This post is not anti-AI. Quite the opposite. It's about using AI where it creates leverage, and removing it where it quietly burns money, reliability, and trust.

Is AI Needed for Everything?

Why Determinism Still Wins in Production Systems

I recently saw a meme: two young people "talking" to each other, but every response is generated by AI. It's funny. Then it's awkward. Then it's uncomfortable.

Because the joke hides a real question:

If we outsource even basic interaction to AI... what else are we delegating without thinking?

And in tech, especially in platforms, DevOps, and production systems, that question matters a lot.

The Problem Isn't AI. It's Where We Put It.

Let's be clear: AI is amazing at ambiguity.

- Natural language

- Exploration

- Reasoning with incomplete context

- Translating intent into action

But production systems are not ambiguous. They are built on expectations.

- Health checks must be consistent

- Sanity tests must be repeatable

- Compliance workflows must be predictable

- Outcomes must be explainable

When we mix those two worlds carelessly, we get something dangerous: non-deterministic logic running deterministic systems.

A Real Example: "Run Full Platform Sanity"

I've seen teams implement AI-driven solutions for things like:

- Platform health checks

- Full sanity runs

- Operational diagnostics

The UX looks great:

"Hey AI, run a full sanity of my platform."

Much better than clicking through a complex UI, right?

Yes. But let's look under the hood.

What Actually Happens

- The LLM interprets the request

- It decides which tools to call

- It invokes them one by one

- Each call:

  - Consumes tokens

  - Adds latency

  - Introduces uncertainty

Same request. Different day. Different internal reasoning path.

Same intent, potentially different execution.

That's not intelligence. That's a token-powered slot machine 🎰.

The Token Black Hole No One Talks About

From a business perspective, this matters.

Simple operational tasks:

- Are repeated often

- Have stable logic

- Produce the same expected result every time

Using an LLM for these tasks means:

- Paying token costs for zero new information

- Debugging behavior that should never change

- Accepting variability where none should exist
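To put rough numbers on that first bullet, here is a back-of-the-envelope sketch. It assumes an agent loop that re-sends the growing conversation on every model call, plus one final call to write the answer. Every token count below is a made-up placeholder, not a measurement.

```python
def agent_input_tokens(base_context: int, tokens_per_result: int, tool_calls: int) -> int:
    """Total input tokens for an agent loop with `tool_calls` tool invocations.

    Model call i (0-based) sends the base context plus the i tool results
    collected so far. There is one extra call at the end to write the answer,
    so the loop makes tool_calls + 1 model calls in total.
    """
    return sum(base_context + i * tokens_per_result for i in range(tool_calls + 1))


# Hypothetical numbers, chosen only for illustration.
BASE_CONTEXT = 2_000      # system prompt + tool descriptions + user request
TOKENS_PER_RESULT = 500   # average size of one tool result

ten_tools = agent_input_tokens(BASE_CONTEXT, TOKENS_PER_RESULT, tool_calls=10)
one_tool = agent_input_tokens(BASE_CONTEXT, TOKENS_PER_RESULT, tool_calls=1)
print(ten_tools, one_tool)  # prints "49500 4500"
```

Under these assumptions the ten-call loop costs eleven times the input tokens of a single workflow call, and that bill repeats on every run even though the logic never changes.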

Executives see this as cost leakage. Architects see it as system fragility. Engineers feel it as unnecessary complexity.

What is your takeaway here?

The Wrong Question We Keep Asking

Most teams ask:

"Can AI do this?"

The better questions are:

- Should AI do this?

- What part of this needs intelligence?

- What part should be guaranteed?

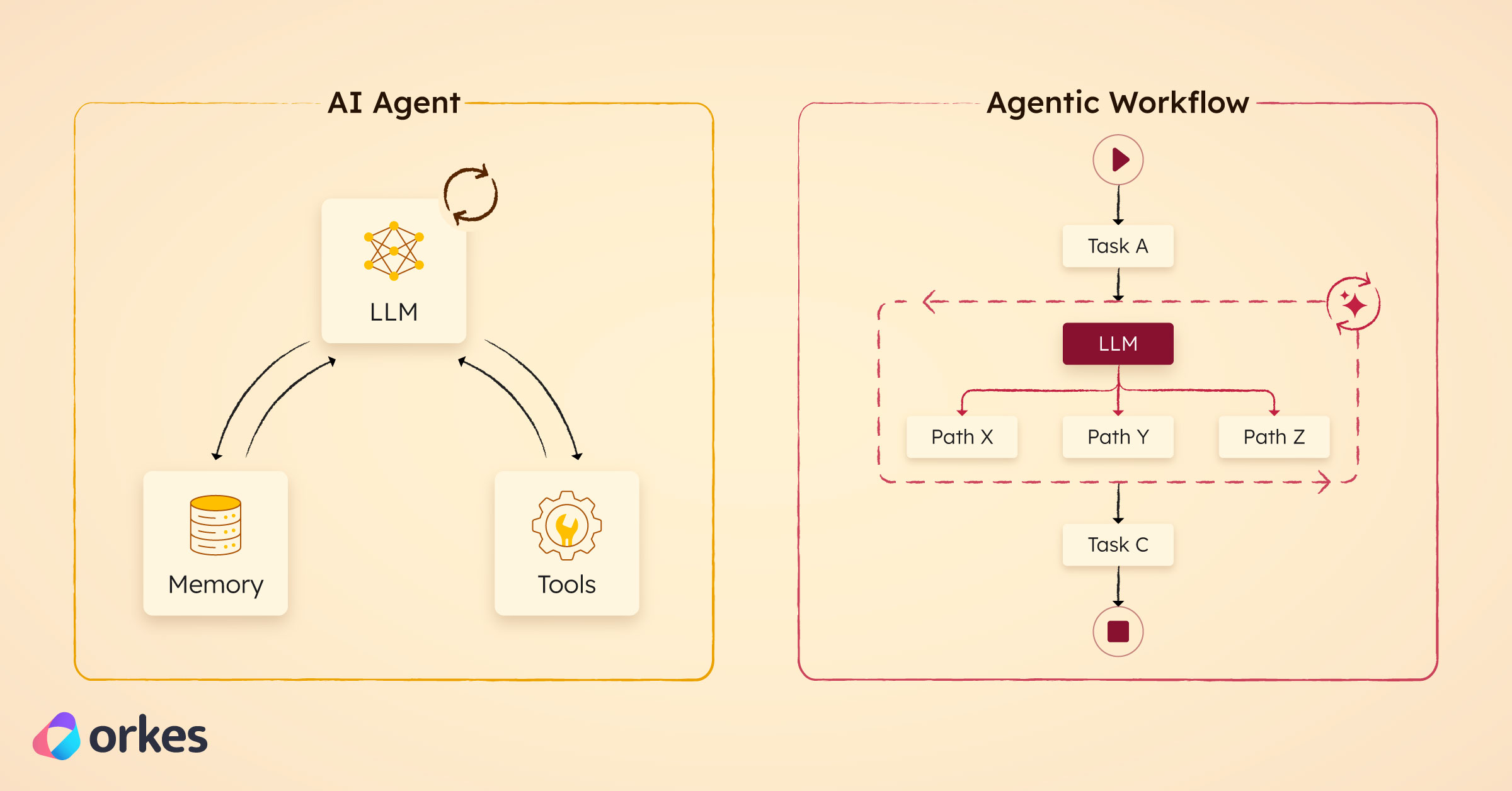

Because the truth is: AI is not a workflow engine.

Deterministic Workflows Are Not Anti-AI

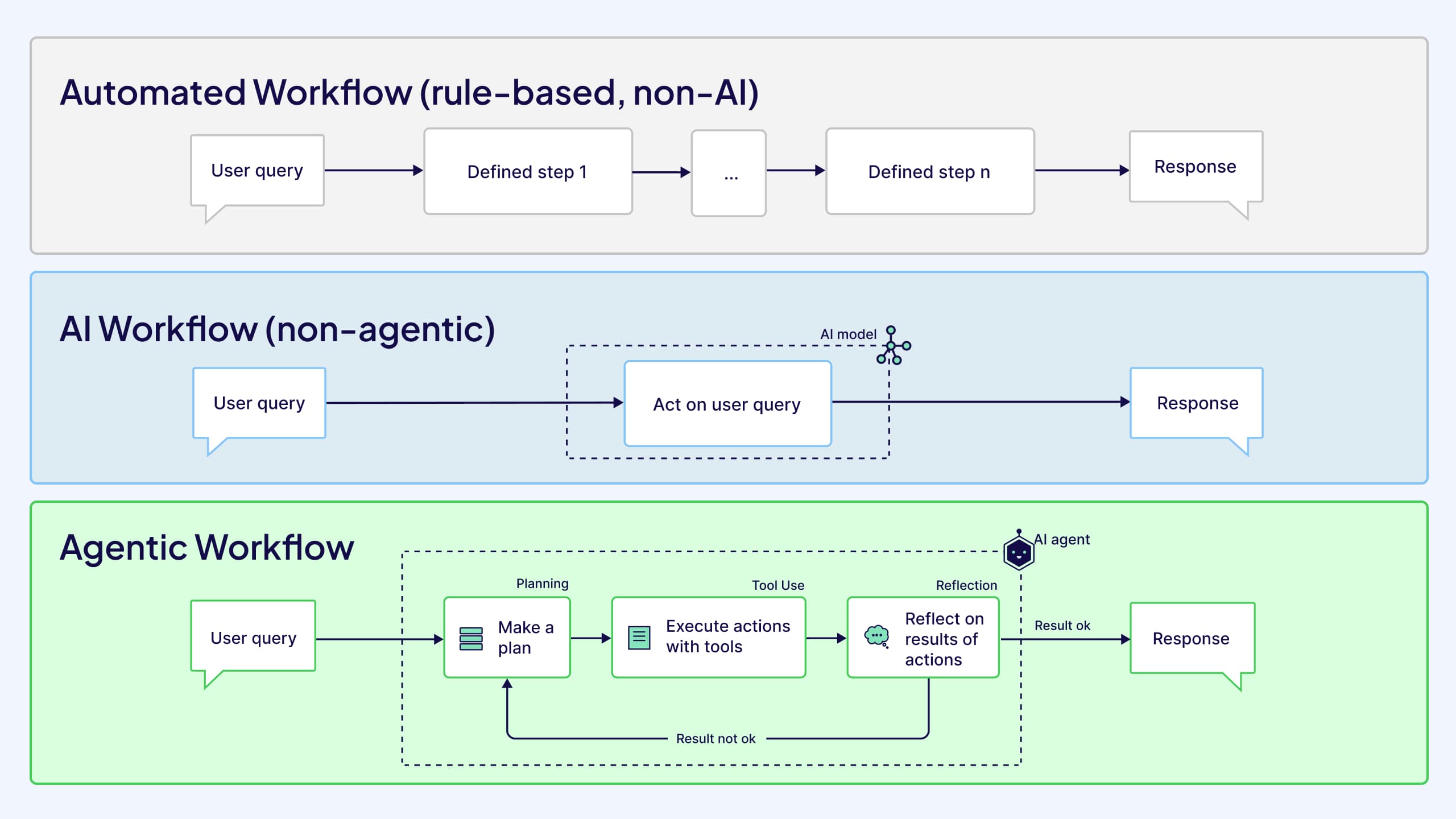

Here's the mental shift that changes everything:

AI should decide what to do. Deterministic systems should decide how it's done.

This is not a compromise. It's an optimization.

AI does:

- Intent understanding

- Decision making

- Tool selection at a high level

Deterministic systems do:

- Execution

- Sequencing

- Error handling

- Consistent outcomes
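A minimal sketch of that split, assuming the model's only job is to return the name of an outcome. The workflow names and the `dispatch` helper are hypothetical, and the model's choice is hard-coded where a real system would call an LLM.

```python
from typing import Callable, Dict


def run_platform_sanity() -> str:
    # The fixed, deterministic implementation would live here (stubbed).
    return "sanity: ok"


def run_health_check() -> str:
    return "health: ok"


# The only outcomes the model is allowed to trigger.
WORKFLOWS: Dict[str, Callable[[], str]] = {
    "run_platform_sanity": run_platform_sanity,
    "run_health_check": run_health_check,
}


def dispatch(model_choice: str) -> str:
    """Run the workflow the model selected, rejecting anything unknown."""
    workflow = WORKFLOWS.get(model_choice)
    if workflow is None:
        raise ValueError(f"unknown workflow: {model_choice!r}")
    return workflow()


# In a real system `model_choice` would come from the LLM.
print(dispatch("run_platform_sanity"))  # prints "sanity: ok"
```

The model picks from a closed list; everything after the pick is ordinary code that can be reviewed and tested.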

From N Tools to One Outcome

Let's simplify.

The naïve AI-first approach

- Expose N tools to the LLM

- Let it figure out:

  - Order

  - Dependencies

  - Retries

  - Failure modes

The smarter hybrid approach

- Map the workflow once

- Make it deterministic

- Expose one tool: "Run full platform sanity"

Same result. Lower cost. Higher reliability.
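Here is roughly what the hybrid version can look like in code. This is a sketch with three invented checks that always pass; the point is that the order lives in the code and the model sees a single entry point.

```python
from typing import Callable, List, Tuple


# Hypothetical checks; each returns True when the check passes.
def check_database() -> bool:
    return True


def check_message_queue() -> bool:
    return True


def check_api_gateway() -> bool:
    return True


# The order is part of the code, not something a model decides at run time.
SANITY_STEPS: List[Tuple[str, Callable[[], bool]]] = [
    ("database", check_database),
    ("message_queue", check_message_queue),
    ("api_gateway", check_api_gateway),
]


def run_full_platform_sanity() -> dict:
    """Single entry point that would be exposed to the LLM as one tool."""
    results = {name: check() for name, check in SANITY_STEPS}
    return {"passed": all(results.values()), "results": results}


print(run_full_platform_sanity()["passed"])  # prints "True"
```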

This Is Where Standards Quietly Save Us

This approach works because we already have the right building blocks.

Deterministic workflows

Defined once, versioned, testable.

That's where Arazzo comes in. Arazzo lets you describe workflows declaratively: no prompts, no guessing.
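To give a feel for "declarative", here is a workflow written as plain data. Arazzo documents are normally YAML or JSON; a Python dict stands in for one here to keep the examples in one language. The field names follow my reading of Arazzo 1.0, the operationIds and file name are invented, and the document has not been validated against the specification, so treat it as an illustration only.

```python
# Hypothetical workflow document, loosely modelled on Arazzo 1.0 field names.
sanity_workflow = {
    "arazzo": "1.0.0",
    "info": {"title": "Platform sanity", "version": "1.0.0"},
    "sourceDescriptions": [
        {"name": "platformApi", "url": "./platform-openapi.yaml", "type": "openapi"}
    ],
    "workflows": [
        {
            "workflowId": "runFullPlatformSanity",
            "steps": [
                {
                    "stepId": "checkDatabase",
                    "operationId": "getDatabaseHealth",
                    "successCriteria": [{"condition": "$statusCode == 200"}],
                },
                {
                    "stepId": "checkQueue",
                    "operationId": "getQueueHealth",
                    "successCriteria": [{"condition": "$statusCode == 200"}],
                },
            ],
        }
    ],
}


def step_order(document: dict, workflow_id: str) -> list:
    """Return the stepIds of one workflow in their declared order."""
    for workflow in document["workflows"]:
        if workflow["workflowId"] == workflow_id:
            return [step["stepId"] for step in workflow["steps"]]
    raise KeyError(workflow_id)


print(step_order(sanity_workflow, "runFullPlatformSanity"))
# prints ['checkDatabase', 'checkQueue']
```

Because the workflow is data, the step order can be read, diffed, and versioned without running anything.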

Execution engine

Those workflows need an orchestrator.

That's the role of OrcA:

- Executes deterministic workflows

- Handles sequencing and retries

- Guarantees consistent outcomes
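The following is not OrcA's API. It is a generic sketch of what "sequencing and retries" means for any deterministic executor: steps run in a fixed order, each gets a bounded number of attempts, and the run stops at the first step that still fails. The `execute` function and its parameters are assumptions made for illustration.

```python
import time
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def execute(steps: List[Step], max_attempts: int = 3,
            delay_seconds: float = 0.0) -> List[Tuple[str, int, bool]]:
    """Run steps in order, retrying each up to max_attempts times.

    Returns a trace of (step name, attempts used, succeeded). Execution
    stops after the first step that exhausts its attempts.
    """
    trace = []
    for name, action in steps:
        attempts = 0
        succeeded = False
        while attempts < max_attempts and not succeeded:
            attempts += 1
            succeeded = action()
            if not succeeded and attempts < max_attempts:
                time.sleep(delay_seconds)  # fixed pause between attempts
        trace.append((name, attempts, succeeded))
        if not succeeded:
            break  # later steps never run after a failed step
    return trace


# Usage: a step that fails once, then succeeds.
calls = {"n": 0}


def flaky() -> bool:
    calls["n"] += 1
    return calls["n"] >= 2


print(execute([("flaky", flaky), ("always_ok", lambda: True)]))
# prints [('flaky', 2, True), ('always_ok', 1, True)]
```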

Capabilities as APIs

Your system capabilities already exist.

They are defined using the OpenAPI Specification (OAS).

Exposing outcomes to AI

Now the key step: Expose workflows, not raw operations, as AI tools.

That's exactly what HAPI MCP enables.

The Mapping That Changes Everything

Once you see it, it's hard to unsee:

- Arazzo → OrcA: deterministic workflows, executed reliably

- OAS → HAPI MCP: APIs and workflows exposed as MCP tools

The LLM doesn't need to know how the sanity check runs. It only needs to know that it exists.
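From the model's side, "knowing that it exists" can be as small as one tool description. The sketch below uses the `name`, `description`, and `inputSchema` fields that MCP tool listings carry. The tool name and the `environment` parameter are hypothetical, and how HAPI MCP actually generates such a description is not shown here.

```python
import json

# Hypothetical MCP-style tool description for a single workflow.
workflow_tool = {
    "name": "run_full_platform_sanity",
    "description": "Run the full platform sanity workflow and return a pass/fail report.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "environment": {"type": "string", "enum": ["staging", "production"]}
        },
        "required": ["environment"],
    },
}

# This is everything the model needs in its context for this capability.
print(json.dumps(workflow_tool, indent=2))
```

No step list, no retry policy, no endpoint names: those stay on the deterministic side.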

What This Unlocks (For Everyone)

For AI systems

- Fewer tools to reason about

- Cleaner context

- Lower token usage

- Higher accuracy

For Developers

- Predictable behavior

- Reusable workflows

- Easier testing

- No prompt gymnastics

For Product Managers

- Features with guarantees

- Stable UX

- Clear ownership of logic

For Executives

- Controlled AI spend

- Reduced operational risk

- Compliance-friendly AI adoption

AI + Determinism Is Collaboration, Not Replacement

This is the part most people miss.

We are not replacing humans with AI. And we are not replacing systems with AI either.

We are connecting intent to execution more intelligently.

Think of it this way:

- AI is the copilot

- Deterministic workflows are the autopilot

- Humans still decide where the plane goes

The Real Future of AI in Platforms

The future is not:

"AI does everything."

The future is:

"AI knows when not to."

If a task:

- Must be consistent

- Must be repeatable

- Must be auditable

Then AI should trigger it, not perform it.
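That rule is simple enough to write down. A tiny sketch, assuming that any one of the three requirements is enough to move a task to the "trigger" side; the `Task` type and `ai_role` function are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    must_be_consistent: bool = False
    must_be_repeatable: bool = False
    must_be_auditable: bool = False


def ai_role(task: Task) -> str:
    """Return 'trigger' if the task needs any guarantee, else 'perform'."""
    needs_guarantee = (
        task.must_be_consistent
        or task.must_be_repeatable
        or task.must_be_auditable
    )
    return "trigger" if needs_guarantee else "perform"


print(ai_role(Task("full platform sanity", must_be_repeatable=True)))  # prints "trigger"
print(ai_role(Task("summarize an incident thread")))                   # prints "perform"
```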

Final Thought

That meme about AI answering "hi" is funny because it's absurd. But the same absurdity exists in production systems, just quieter and more expensive.

Expose outcomes, not chaos. Let AI reason. Let systems execute.

If your AI needs to reason about execution, your system design is already leaking. AI should reason about intent, not rebuild your workflows one token at a time.

Thanks for reading. Be HAPI and Go Rebels! ✊🏼

FAQ - Common Questions

When Should AI Not Be Used?

AI is powerful, but power without boundaries becomes a liability.

AI should not be used when a task:

- Must produce the same result every time

- Is executed frequently at scale

- Has clear, predefined logic

- Requires auditability or compliance

- Impacts production stability

These are not edge cases. These are core platform operations.

Health checks, sanity runs, compliance validations, and operational diagnostics do not benefit from probabilistic reasoning. They benefit from guarantees.

Using AI in these scenarios introduces:

- Non-deterministic behavior

- Higher operational cost

- Hard-to-reproduce failures

- Loss of trust in the system

AI excels at deciding what to do. It struggles when asked to repeat the same thing perfectly.

That's the line most teams cross without noticing.

Why Do Deterministic Workflows Matter in Production Systems?

Deterministic workflows are not a legacy concept. They are a production necessity.

A deterministic workflow guarantees:

- The same inputs lead to the same outputs

- Execution order is fixed and explainable

- Failures are reproducible

- Behavior can be tested, versioned, and audited

This is why deterministic workflows matter, especially in platforms, DevOps, and enterprise systems.
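One way to check the "same inputs, same outputs" guarantee is to fingerprint the execution trace and compare runs. The workflow below is a stand-in with three invented steps; the hashing approach is an assumption about how you might test this, not a prescribed method.

```python
import hashlib
import json


def run_workflow(inputs: dict) -> list:
    """Stand-in deterministic workflow: fixed steps, output depends only on inputs."""
    trace = []
    for step in ("validate", "check_services", "report"):
        trace.append({"step": step, "environment": inputs["environment"]})
    return trace


def fingerprint(trace: list) -> str:
    """Stable SHA-256 hash of a trace, usable in tests and audit logs."""
    canonical = json.dumps(trace, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


first = fingerprint(run_workflow({"environment": "staging"}))
second = fingerprint(run_workflow({"environment": "staging"}))
assert first == second  # same inputs, same trace, same fingerprint
```

A check like this can run in CI on every change to the workflow. There is no equivalent assertion for an execution path that a model rebuilds on each request.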

When AI replaces deterministic execution, you lose:

- Predictability

- Cost control

- Debuggability

- Confidence in outcomes

In production, consistency is not optional. It is the foundation of reliability.

AI-generated execution paths might work today and fail tomorrow, without any code changes. That's not innovation. That's operational risk disguised as intelligence.

How Do You Combine AI and Deterministic Systems (The Right Way)?

The most effective systems today are not "AI-first" or "AI-everywhere."

They are hybrid by design.

Here's the winning pattern:

- AI handles intent and ambiguity

- Deterministic systems handle execution

AI interprets what the user wants. Deterministic workflows define how it happens.

This separation creates leverage:

- AI remains flexible and expressive

- Execution remains predictable and cost-efficient

Instead of exposing dozens of low-level tools to an LLM, you expose one deterministic outcome.

The AI triggers it. The system guarantees it.

This is how you combine AI and deterministic systems without sacrificing reliability or burning tokens unnecessarily.

Why Is OpenAPI Workflow Orchestration a Game Changer in AI Systems?

Most systems already have what they need. They just expose it incorrectly.

APIs describe capabilities. Workflows describe outcomes.

With OpenAPI workflow orchestration, you move from raw operations to structured execution.

Here's how it works in practice:

- OpenAPI defines what your system can do

- Workflow specifications define how operations are combined

- Orchestrators execute those workflows deterministically

- AI tools expose workflows, not individual endpoints

This approach transforms APIs into reliable building blocks for AI systems.

Instead of asking an LLM to guess:

- Which endpoint to call

- In what order

- With which retries

You give it a single, well-defined tool:

"Run full platform sanity"

Same result. Every time.

That's the difference between AI-driven chaos and OpenAPI workflow orchestration done right.
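As a rough sketch of the first three steps in that list: a workflow names operations by `operationId`, and ordinary code resolves each one to a concrete request from the OpenAPI document. The trimmed document and the `plan` helper below are hypothetical; a real OpenAPI file carries many more fields.

```python
from typing import Dict, List, Tuple

# Trimmed, hypothetical OpenAPI document: only the fields this sketch reads.
openapi_doc = {
    "openapi": "3.1.0",
    "paths": {
        "/health/database": {"get": {"operationId": "getDatabaseHealth"}},
        "/health/queue": {"get": {"operationId": "getQueueHealth"}},
    },
}

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def index_operations(doc: dict) -> Dict[str, Tuple[str, str]]:
    """Map each operationId to its (HTTP method, path)."""
    index = {}
    for path, item in doc["paths"].items():
        for method, operation in item.items():
            if method in HTTP_METHODS and "operationId" in operation:
                index[operation["operationId"]] = (method.upper(), path)
    return index


def plan(workflow_steps: List[str], doc: dict) -> List[Tuple[str, str]]:
    """Resolve an ordered list of operationIds into concrete requests."""
    operations = index_operations(doc)
    return [operations[op_id] for op_id in workflow_steps]


print(plan(["getDatabaseHealth", "getQueueHealth"], openapi_doc))
# prints [('GET', '/health/database'), ('GET', '/health/queue')]
```

The endpoint, order, and method are all resolved by lookup, so there is nothing left for the model to guess.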

Why Can't AI Replace Deterministic Execution in Production Systems?

AI is a powerful tool for interpreting intent and handling ambiguity. However, it fundamentally lacks the guarantees required for deterministic execution in production systems.

Deterministic execution demands:

- Consistency: The same inputs must always yield the same outputs.

- Predictability: Execution paths must be clear and explainable.

- Auditability: Actions must be traceable and verifiable.

- Reliability: Systems must behave consistently under all conditions.

AI models, by their nature, introduce variability. Their outputs can change with the surrounding context, the data the model was trained on, and even the random seed used during sampling. This unpredictability is incompatible with the stringent requirements of production systems.
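The seed effect is easy to reproduce with a toy model. The sketch below samples a "first tool to call" from a softmax over made-up preference scores. It is a simplified stand-in for how sampling works in language models, not a model of any particular LLM.

```python
import math
import random
from typing import Dict


def sample_next_action(scores: Dict[str, float], temperature: float, seed: int) -> str:
    """Sample one action from softmax(scores / temperature) using the given seed."""
    rng = random.Random(seed)
    actions = list(scores)
    weights = [math.exp(scores[action] / temperature) for action in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


# Hypothetical model preferences for the first tool to call.
scores = {"check_database": 1.0, "check_queue": 0.9, "check_gateway": 0.8}

# Same scores, same temperature, different seeds.
picks = {sample_next_action(scores, temperature=1.0, seed=s) for s in range(50)}
print(picks)
```

With the scores this close together, different seeds pick different first steps. A fixed seed reproduces the same pick in this toy, but a production workflow should not depend on that.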

How Does HAPI MCP Facilitate Deterministic Workflows for AI Systems?

HAPI MCP is not just another AI tool. It's a bridge, built on the Model Context Protocol (MCP), between AI intent and deterministic execution.

HAPI MCP enables you to:

- Expose deterministic workflows as MCP tools

- Let AI models discover and invoke these workflows seamlessly

- Maintain clear separation between intent and execution

- Reduce token consumption by minimizing AI reasoning about execution details

- Ensure consistent, repeatable outcomes in production systems

By using HAPI MCP, you empower AI systems to leverage the reliability of deterministic workflows without losing the flexibility of AI-driven intent.
